By Mollie Barnes

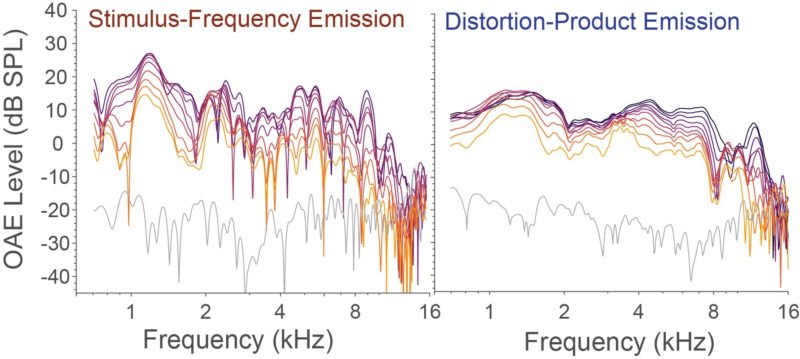

Two types of otoacoustic emissions recorded from the cochlea of a normally hearing human subject across five octaves and multiple stimulus levels (denoted by color). Image Credit: Carolina Abdala, Ph.D.

If you get really quiet and enter an almost meditative state, you just might be able to hear yourself hearing. Well, that is, you might be able to if you’re sitting in a hearing laboratory using specialized recording equipment for a study on otoacoustic emissions.

While they might not be detectable by the human ear, otoacoustic emissions (OAEs) are soft sounds that the ear generates as it listens to sound. First discovered by physicist David Kemp in 1978, these sounds are produced by the cochlea, the hearing organ of the inner ear, while it codes and transduces sound.

OAEs are exciting in the medical world because they provide an objective test of hearing, rather than a subjective, “Can you hear me now?” type test. Currently they are used in audiology clinics to determine if someone has significant hearing loss or not. If a patient has profound sensory hearing loss, these emissions aren’t detected.

A team of researchers at the USC Caruso Department of Otolaryngology – Head and Neck Surgery seeks to do more with these sounds than just determine whether someone can hear or not. They seek to determine what is causing hearing loss based on the differences in these small emissions.

For an analogy on how it works, Carolina Abdala, PhD, principal investigator on the study, compares the OAE test they are developing to a smog emissions test.

“In California, we need to take smog tests in order to have our car assessed,” says Professor Abdala. “They’re not going to take apart the engine to look inside. Auto mechanics look at the exhaust emissions, and say, ‘The exhaust shows us that we have a clean car that’s working well enough to produce relatively little pollution.’ Emissions from the ear are kind of like that. They come out of the ear, and we record them to find out about the cochlea.

“We can’t get to the cochlea,” she says. “It’s embedded in the hardest bone in the body, the temporal bone, and we can’t access it directly. In humans, we can’t currently image the cochlea for clinical purposes. So these otoacoustic emissions are one of the only measures that tell us what’s going on inside the inner ear.”

Hearing loss can be caused by a variety of things, including Meniere’s Disease, noise exposure, aging, ototoxic drugs (drugs that harm hearing), and genetic factors. But even when the underlying causes differ, in the clinic all of these types of hearing loss are often treated the same. That’s why Professor Abdala decided to apply for a National Institutes of Health grant to find out if differences in these emissions can predict the underlying cause of hearing loss. If successful, clinics will be able to differentially diagnose and treat patients based on the cause or source of their hearing loss.

The way the audiogram, which tests for hearing loss, is set up now, there is no distinction between someone who has hearing loss due to noise exposure in the military and someone who has hearing loss that’s simply due to aging.

“We were frustrated that we have developed all these techniques to measure otoacoustic emissions in research, yet in the clinic they are still doing a crude, abbreviated application of the test,” Professor Abdala says. “That’s why we are taking the incredible, advanced methodology developed after 30 years of research and translating it into something useful for the clinic with this study. Our hope is that this study will help create targeted interventions, such as specific hearing aids to treat auditory deficits for a particular etiology of hearing loss, rather than using a catch-all approach for all hearing losses of a similar degree.”

Despite setbacks in starting their research during the pandemic, the team has already found and presented some preliminary findings suggesting that there may be differences in the emissions of people who have hearing loss due to aging versus noise exposure.

Professor Abdala credits this to their comprehensive OAE test.

“We saw a promising difference between these groups because we do such a detailed assessment across five octaves and multiple levels,” she says. “We have the chance of seeing differences where others may not, because we cover such a broad space with our detailed methods.”

After the test comes the analysis, which is being led by Professor Christopher Shera, the study’s Co-Chief Principal Investigator.

“Now that we’ve made otoacoustic measurements in hundreds of subjects, we can begin to look for patterns that might distinguish different kinds of hearing loss,” Professor Shera says. “Our analysis will look for patterns using both well-established statistical methods and modern methods based on machine learning.”

But the detailed test is also going to be the biggest challenge further down the road. Ultimately, a two-hour comprehensive auditory test is not possible in audiology clinics, which have limited time with patients.

“The translation goal is to abbreviate the test so that it takes only ten minutes or so,” Professor Abdala says. “We’re going to try to pare it down to something that can be used in the clinic once we have completed data collection, and that’s going to happen using some sophisticated statistics to optimize differential diagnosis.”

But that won’t happen until the tail end of the study.

“It’s a lofty goal, and I don’t know if we’re going to be able to achieve all of it,” Professor Abdala says. “But if we can distinguish between hearing losses produced by different underlying pathologies, it could create new paths of intervention. It’s exciting to push the limits of what otoacoustic emissions can do.”